-

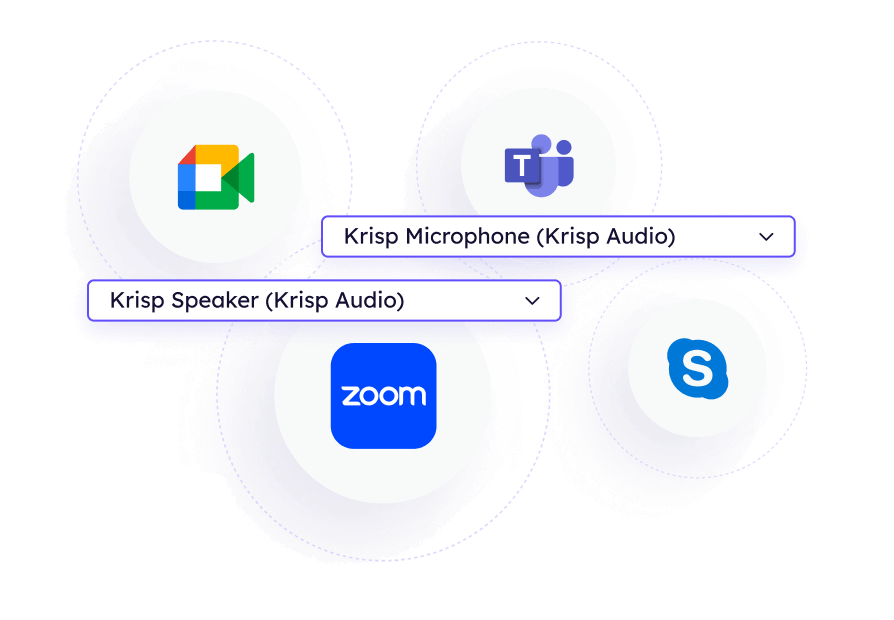

Works with any conferencing app

-

Works with all call center platforms

-

Works with any headphone and device

-

Features

For individuals and teams

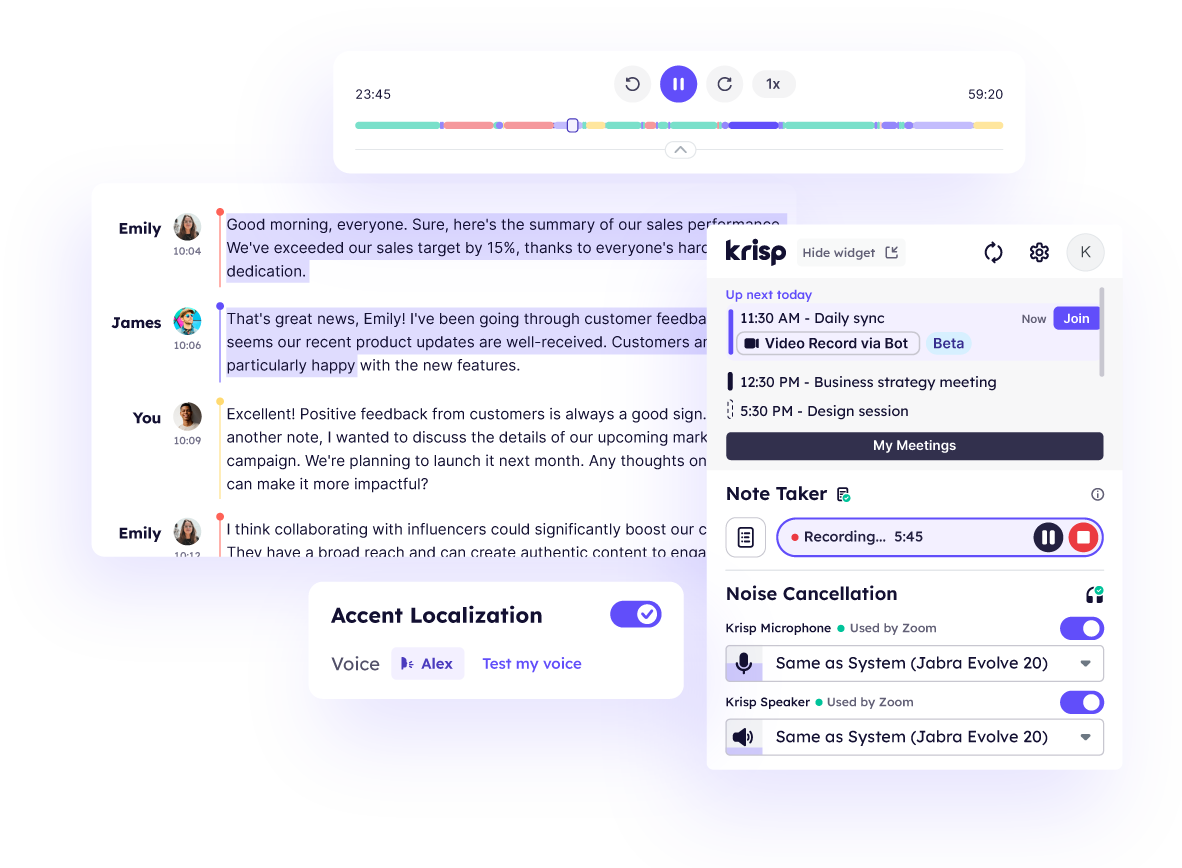

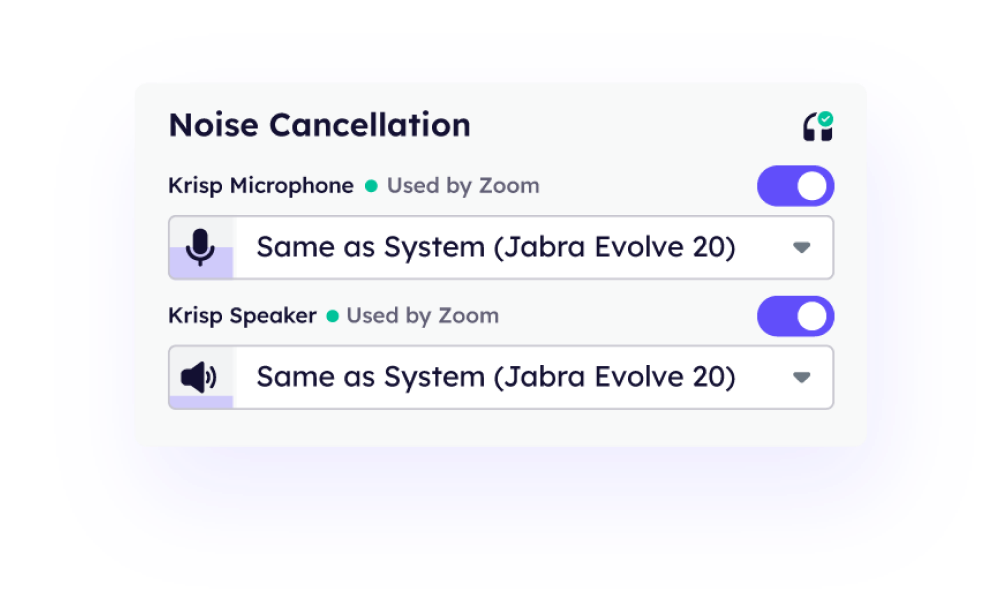

- AI Noise Cancellation Removes background noises, voices and echoes from online meetings.

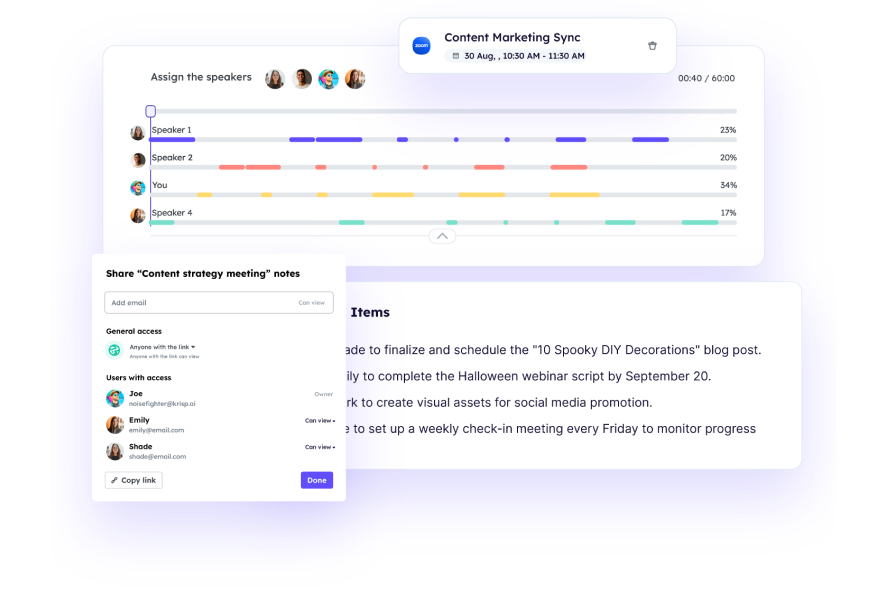

- Meeting Transcription Transcribes meetings and calls in real-time for individuals and teams.

- AI Meeting Notes and Summary Generates meeting notes, summaries and action items from meetings.

- Meeting Recording Automatically records meetings across all communication apps.

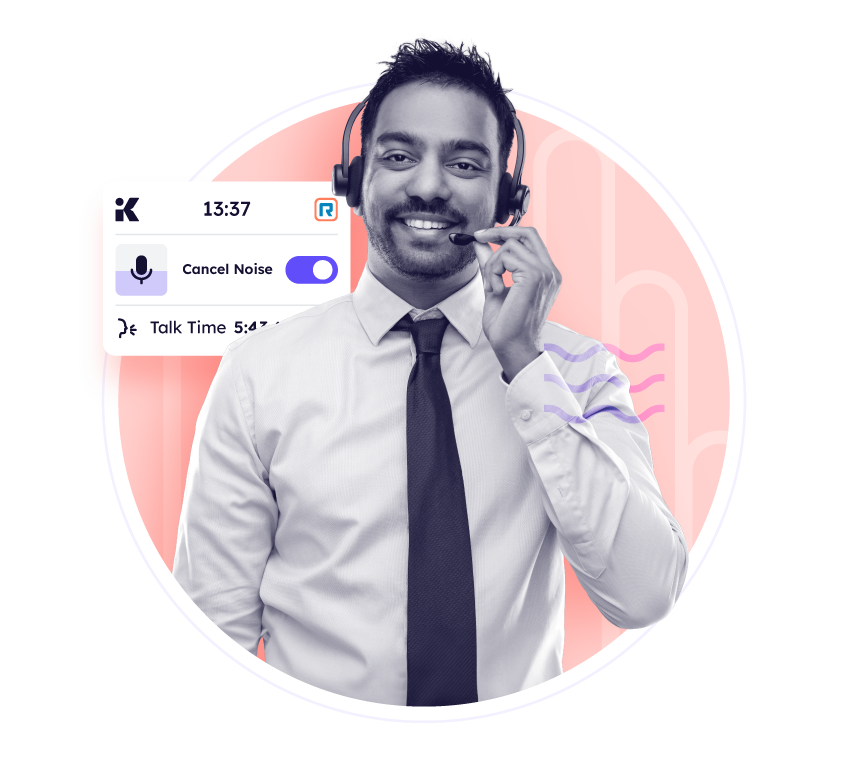

For enterprise and call centers- AI Noise Cancellation Removes background noises, voices and echoes from customer calls.

- AI Accent Localization Converts agents' accents in real-time to the customer's native accent for clearer communication.

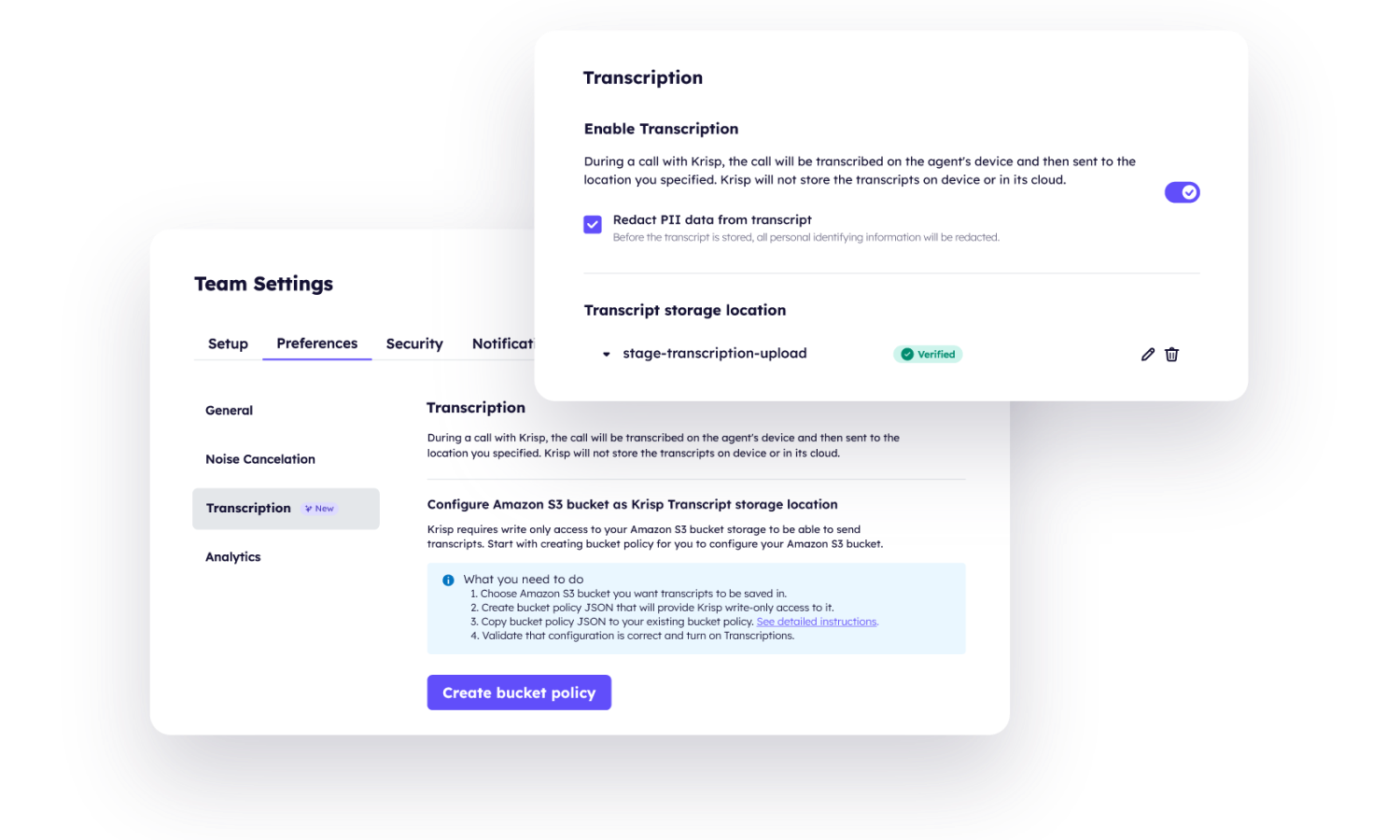

- Call Center Transcription Securely transcribes the agent-customer conversations on device and in real time.


Use cases

- Call Center (BPO): Improved customer experience (CX) and agent experience (AX) through AI-powered noise cancellation, transcription and more.

- Call Center (Enterprise): Award-winning voice AI solutions designed to improve customer and agent experience.

- Professional Services: Clear, distraction-free calls, with AI that transcribes and summarizes your consultations.

- Sales and Success: Focus on selling while our AI enhances your voice quality and takes meeting notes.

- Hybrid Work: Supports hybrid teams with clear communication and solid accountability.

- SDK and Developers: Integrate Krisp’s award-winning voice AI technologies into your product.

- Individuals and Freelancers: Distraction-free calls, with transcriptions and meeting notes.
